Real-Time Patch-Based Stylization of Portraits Using Generative Adversarial Network


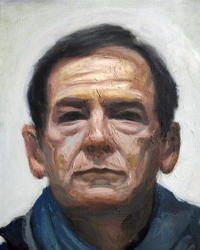

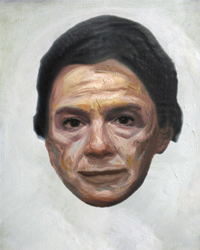

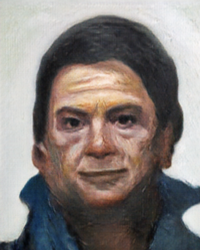

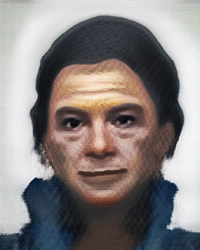

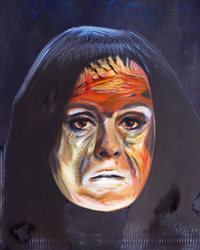

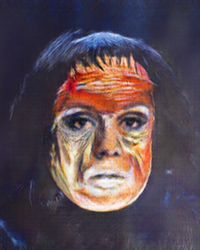

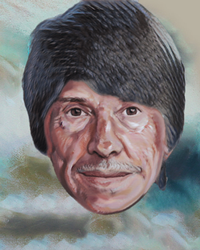

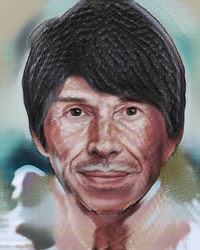

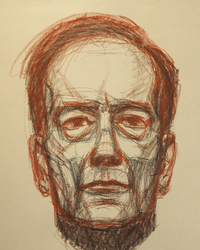

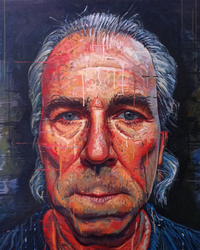

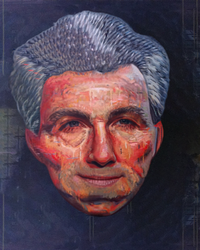

We present a learning-based style transfer algorithm for human portraits that requires significantly less computation than the current state of the art while maintaining comparable visual quality. We show how to design a conditional generative adversarial network capable of reproducing the output of the patch-based method of Fišer et al., which delivers state-of-the-art visual quality but is slow to compute. Since the resulting end-to-end network can be evaluated quickly on current consumer GPUs, our solution enables the first real-time, high-quality style transfer to facial videos running at interactive frame rates. Moreover, in cases where the original algorithm of Fišer et al. fails, our network can produce a more visually pleasing result thanks to its ability to generalize. We demonstrate the practical utility of our approach on a variety of styles and target subjects.
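To make the idea of training a conditional GAN to reproduce a slow method's output more concrete, here is the standard pix2pix objective of Isola et al. (pix2pix is one of the baselines in the comparison). This is a sketch under stated assumptions, not necessarily the exact loss used in this work. We assume that $x$ is a target portrait frame (possibly with extra guidance channels), $y$ is the corresponding stylized frame produced offline by the patch-based method of Fišer et al., $G$ is the feed-forward generator, $D$ is a discriminator conditioned on $x$, and $\lambda$ is a weighting hyperparameter. The noise input used in pix2pix is omitted for simplicity.

$$
\begin{aligned}
\mathcal{L}_{\mathrm{cGAN}}(G, D) &= \mathbb{E}_{x,y}\big[\log D(x, y)\big] + \mathbb{E}_{x}\big[\log\big(1 - D(x, G(x))\big)\big],\\
\mathcal{L}_{1}(G) &= \mathbb{E}_{x,y}\big[\lVert y - G(x) \rVert_1\big],\\
G^{*} &= \arg\min_{G}\,\max_{D}\; \mathcal{L}_{\mathrm{cGAN}}(G, D) + \lambda\,\mathcal{L}_{1}(G).
\end{aligned}
$$

Under this setup, the slow patch-based synthesis is needed only to create training pairs $(x, y)$. At run time only $G$ is evaluated, a single forward pass per frame, which is what allows stylization at interactive rates.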

Proceedings of the 8th ACM/EG Expressive Symposium, pp. 33–42, 2019

Comparison

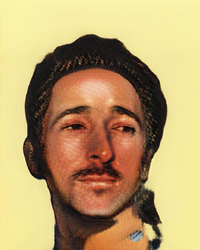

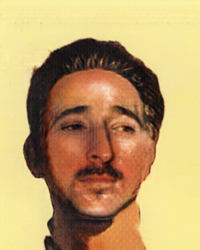

Columns, left to right: style exemplar, our approach, pix2pixHD, pix2pix.
