Research meetings

Thursday, 19 June 2025, 10:00

Martin Káčerik, Jiří Bittner – UBVH: Unified Bounding Volume and Scene Geometry Representation for Ray Tracing

Rehearsal presentation for High Performance Graphics

Abstract: Bounding volume hierarchies (BVHs) are currently the most common data structure used to accelerate ray tracing. The existing BVH methods distinguish between the bounding volume representation associated with the interior BVH nodes and the scene geometry representation associated with leaf nodes. We propose a new method that unifies the representation of bounding volumes and triangular scene geometry. Our unified representation builds on skewed oriented bounding boxes (SOBB) that yield tight bounds for interior nodes and precise representation for triangles in the leaf nodes. This innovation makes it possible to streamline the conventional massively parallel BVH traversal, as there is no need to switch between testing for ray intersection in interior nodes and leaf nodes. The results show that the proposed method accelerates ray tracing of incoherent rays by 1.2x–11.8x over the AABB BVH, 1.4x–4.2x over the 14-DOP BVH, 1.1x–2.0x over the OBB BVH, and 1.1x–1.7x over the SOBB BVH.

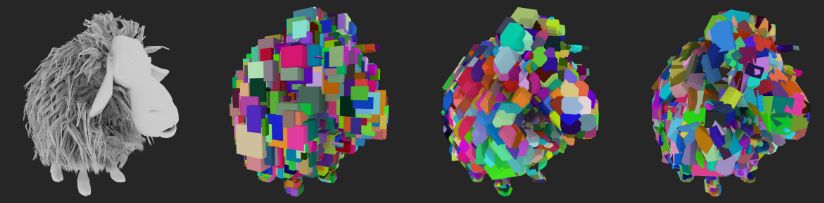

Radim Špetlík, David Futschik, Daniel Sýkora – StructuReiser: A Structure-preserving Video Stylization Method

Rehearsal presentation for the Eurographics Symposium on Rendering

Abstract: We introduce StructuReiser, a novel video-to-video translation method that transforms input videos into stylized sequences using a set of user-provided keyframes. Unlike most existing methods, StructuReiser strictly adheres to the structural elements of the target video, preserving the original identity while seamlessly applying the desired stylistic transformations. This provides a level of control and consistency that is challenging to achieve with text-driven or keyframe-based approaches, including large video models. Furthermore, StructuReiser supports real-time inference on standard graphics hardware as well as custom keyframe editing, enabling interactive applications and expanding possibilities for creative expression and video manipulation.

Tuesday, 6 May 2025, 14:30

Rehearsal presentations for Eurographics / Expressive

Jiří Minarčík (FJFI), Jakub Fišer, Daniel Sýkora – Example-Based Authoring of Expressive Space Curves

Abstract: In this paper we present a novel example-based stylization method for 3D space curves. Inspired by image-based arbitrary style transfer (e.g., Gatys et al. [2016]), we introduce a workflow that allows artists to transfer the stylistic characteristics of a short exemplar curve to a longer target curve in 3D, a problem that is, to the best of our knowledge, previously unexplored. Our approach involves extracting the underlying, unstyled form of the exemplar curve using a novel smoothing flow. This unstyled representation is then aligned with the target curve using a modified Fréchet distance. To achieve precise matching with reduced computational cost, we employ a semi-discrete optimization scheme, which outperforms existing methods for similar curve alignment problems. Furthermore, our formulation provides intuitive controls for adjusting stylization strength and transfer temperature, enabling greater creative flexibility. Its versatility also allows for the simultaneous stylization of additional attributes along the curve, which is particularly valuable in 3D applications where curves may represent medial axes of complex structures. We demonstrate the effectiveness of our method through a variety of expressive stylizations across different application contexts.

Alejandra Magana, Petr Felkel, Jiri Zara – Computer Graphics Instructors’ Intentions for Using Generative AI for Teaching

Abstract Background: Generative AI has significant potential to support learning processes, such as generating personalized content matching individual student needs. It also has the potential to support teaching processes by assisting instructors in generating content, assessing students, or supporting practice. This study investigates how computer graphics instructors have used generative AI or are planning to use generative AI to support their teaching. We implemented an anonymous online survey based on the Unified Theory of Acceptance and Use of Technology (UTAUT) methodology and distributed it among Eurographics members. The research questions were: (1) What are computer graphics instructors’ ways of integrating generative AI for teaching and learning purposes? (2) What are the influencing factors computer graphics instructors have considered for integrating generative AI for teaching and learning purposes?

Results: Between October 2024 and January 2025, we received 12 responses. Findings suggest that while some instructors have integrated generative AI into some aspects of their teaching, others have not and are hesitant to adopt it in the future, particularly as related to generating content for creating assignments such as lecture notes, summaries, teaching examples, etc., and supporting their assessment processes such as providing feedback, evaluating assignments, or grading exams. However, instructors were more open to using generative AI to support their teaching practices, particularly as related to pedagogy, such as providing students with interactive practice problems and supporting their creative content generation.

Conclusion: Findings from the study identified the level of acceptance among computer graphics instructors, primarily full professors, and their experiences and intentions for using generative AI. To get a better understanding of the adoption of generative AI in the field of computer graphics education, we would like to invite the community to share their experiences and future intentions via the survey, which will remain open for additional input.

Martin Káčerik, Jiří Bittner – SOBB: Skewed Oriented Bounding Boxes for Ray Tracing

Abstract: We propose skewed oriented bounding boxes (SOBB) as a novel bounding primitive for accelerating the calculation of ray-scene intersections. SOBBs have the same memory footprint as the well-known oriented bounding boxes (OBB) and can be used with a similar ray intersection algorithm. We propose an efficient algorithm for constructing a BVH with SOBBs, using a transformation from a standard BVH built for axis-aligned bounding boxes (AABB). We use discrete orientation polytopes as a temporary bounding representation to find tightly fitting SOBBs. Additionally, we propose a compression scheme for SOBBs that makes their memory requirements comparable to those of AABBs. For secondary rays, the SOBB BVH provides a ray tracing speedup of 1.0-11.0x over the AABB BVH and it is 1.1x faster than the OBB BVH on average. The transformation of AABB BVH to SOBB BVH is, on average, 2.6x faster than the ditetrahedron-based AABB BVH to OBB BVH transformation.

Thursday, 24 April 2025, 10:00

Rehearsal presentations for the CESCG 2025 student conference:

Tereza Hlavová – Rendering Night Cities

Lukáš Cezner – Wide Bounding Volume Hierarchies for Ray Tracing

Matěj Sakmary – Resolution Matched Virtual Shadow Maps

Thursday, 12 September 2024, 10:30

Yulia Gryaditskaya (University of Surrey) – Human-centered approach to generative tasks: Sketch to X (invited talk)

Abstract: A generative AI model that produces high-quality output but lacks user control may be entertaining, but it falls short of practical utility. Text is a widely used input for many modern models targeting image, video, or 3D asset generation. Recently, there has been a growing focus on incorporating spatial inputs, with freehand sketches and doodles emerging as popular modalities. In this talk, I will explore the common challenges of working with sketches and designing effective tools. I will illustrate these challenges through two of our recent projects: (1) a 3D city block massing tool that uses freehand sketches (ECCV’24) and (2) a system for understanding freehand scene sketches (CVPR’24). I will also briefly discuss where we stand in adopting sketches as inputs to generative models.

Speaker info: https://yulia.gryaditskaya.com/

Wednesday, 11 September 2024, 10:00

Michal Kučera – Towards Interactive, Robust, and Stereoscopic Style Transfer (PhD thesis rehearsal)

Abstract: Since its inception in the early 2000s, the research field of style-transfer and automatic stylization has seen a steady rise in popularity up to a point where its algorithms are being employed by professional digital artists in their creation process, allowing them to quickly and conveniently stylize images or video sequences based on either a handmade or a generated example. Even though this research field has seen major strides in recent years, there are still substantial issues and limitations preventing larger-scale utilization of such algorithms: limitations such as real-time or interactive stylization of either static images or video sequences, significant quality degradation in cases where example and target keyframes differ too much, temporal coherency of stylized video sequences, infeasible requirements for learning an image-to-image network, as well as stereoscopic applications of style-transfer algorithms remaining uncertain.

In this dissertation thesis we describe the current state-of-the-art in the field of example-based style transfer. Along with that, we propose a set of algorithms that allow interactive production of high quality real-time stylizations of video sequences, both based on semantically meaningful automatic style transfer and keyframe-based learning approaches, on which we introduce new methods to solve the difficult requirement of large paired datasets or domain-specific datasets. We also propose a new method that enables style transfer to still be possible when applied to a stereoscopic scenario.

In particular, we propose: (1) a neural method approximating results of a patch-based style transfer method in real time, (2) an interactive method for real-time style transfer of video sequences, (3) a computationally inexpensive method for real-time stylization of facial videos even on low-end devices, (4) a video style-transfer method greatly improving the output quality and long-term coherence, and finally (5) a method able to achieve stereo-consistent style transfer of video sequences.

Combined, these contributions make important steps toward high-quality, real-time, interactive, temporally and stereoscopically consistent style transfer.

Wednesday, 17 July 2024, 11:00

Martin Káčerik, Jiří Bittner – SAH-Optimized k-DOP Hierarchies for Ray Tracing

Paper presentation at HPG 2024

Abstract: We revisit the idea of using hierarchies of k-sided discrete orientation polytopes (k-DOPs) for ray tracing. We propose a method for building a k-DOP based bounding volume hierarchy while optimizing its topology using the surface area heuristic. The key component of our method is a fast and exact algorithm for evaluating the surface area of a 14-DOP combined with the parallel locally ordered clustering algorithm (PLOC). Our k-DOP PLOC builder has about 40% longer build times than AABB PLOC, but for scenes with oblong slanted objects, the resulting BVH provides up to 2.5x ray tracing speedup over AABB BVH. We also show that k-DOPs can be used in combination with other techniques, such as oriented bounding boxes (OBBs). Transforming k-DOP BVH into OBB BVH is straightforward and provides up to 12% better trace times than the transformation from AABB to OBB BVH.

Wednesday, 29 November 2023, 10:00

Vojtěch Radakulan, David Sedláček – The Most Expensive Museum in the World: Three Player Cooperative Game Between VR and PC Platforms Investigating Empathy between Players and Historical Characters.

Paper presentation at SIGGRAPH Asia, Art Papers

Abstract: The paper describes an art installation based on a local cooperative cross-platform VR/PC game for three players. The story is based on the history of a nuclear power plant that was built but never turned on because of a public referendum. The game mechanics are built upon the interaction of three playable historical characters: engineer, activist, and politician. The main goal was to induce empathy in the players by replaying the game from each character’s perspective. We present the story implementation, custom-made interfaces, physical setup, and an evaluation based on recordings from a four-month display in a public gallery.

Wednesday, 4 October 2023, 10:00

Martin Káčerik – PVLI: Potentially Visible Layered Image for Real-Time Ray Tracing

Presentation of a paper from Visual Computer / CGI 2023 (15 min + discussion)

Wednesday, 3 May 2023, 10:00

Ivo Malý – Draw-Cut-Glue: Comparison of Paper House Model Creation in Virtual Reality and on Paper in Museum Education Programme – Pilot Study

Paper presentation at EG 2023 – Education (20 min + discussion)

Ivo Malý, Iva Vachková, David Sedláček. Draw-Cut-Glue: Comparison of Paper House Model Creation in Virtual Reality and on Paper in Museum Education Programme – Pilot Study

Martin Káčerik – On the Importance of Scene Structure for Hardware-Accelerated Ray Tracing

Paper presentation at WSCG 2023 – communication papers (10 min + discussion)

Martin Káčerik, Jiri Bittner – On the Importance of Scene Structure for Hardware-Accelerated Ray Tracing

Abstract: Ray tracing is typically accelerated by organizing the scene geometry into an acceleration data structure. Hardware-accelerated ray tracing, available through modern graphics APIs, exposes an interface to the acceleration structure builder that builds it, given the input scene geometry. This builder is, however, opaque, with limited control over the internal building algorithm. Additional control is available through the layout of the builder input data, that is, the scene geometry structured in a user-defined way. In this work, we evaluate the impact of different scene structuring on the performance of the ray-triangle intersections in the context of hardware-accelerated ray tracing. We discuss the possible causes of significantly different outcomes for the same scene and outline a solution in the form of automatic input structure optimization.