Leveraging Reward Regularization in Imperfect Information Games

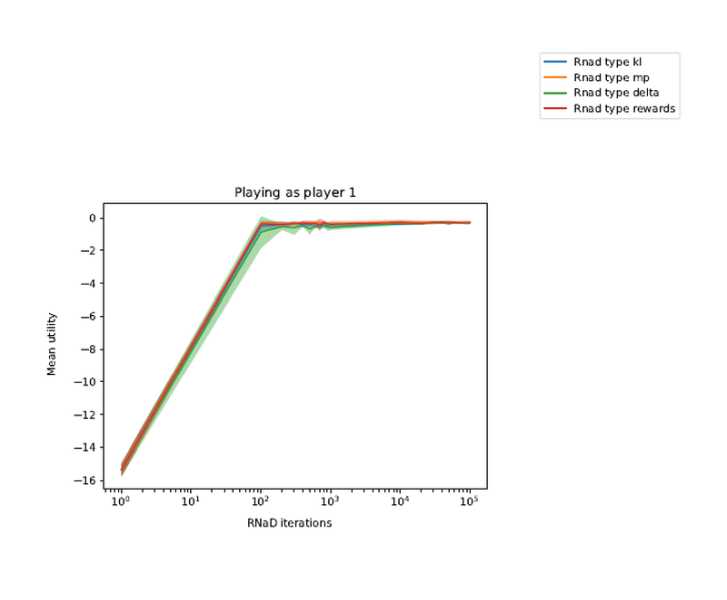

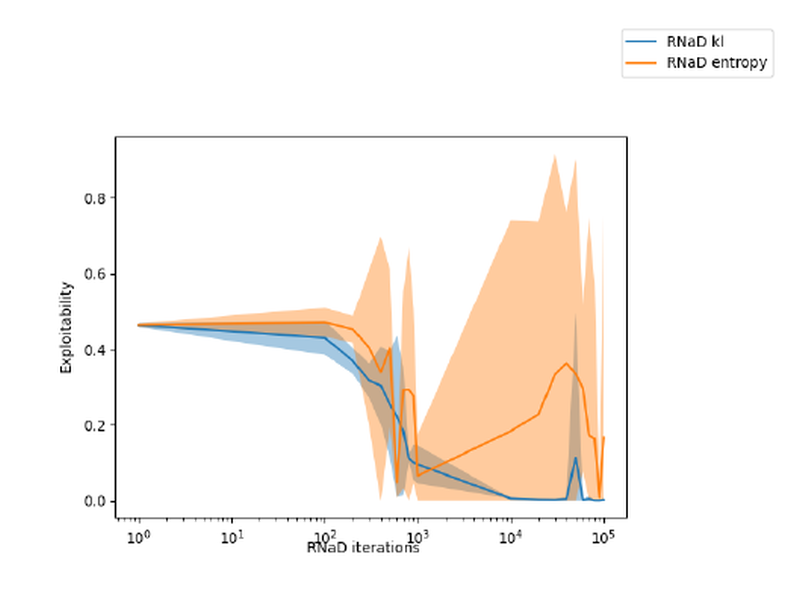

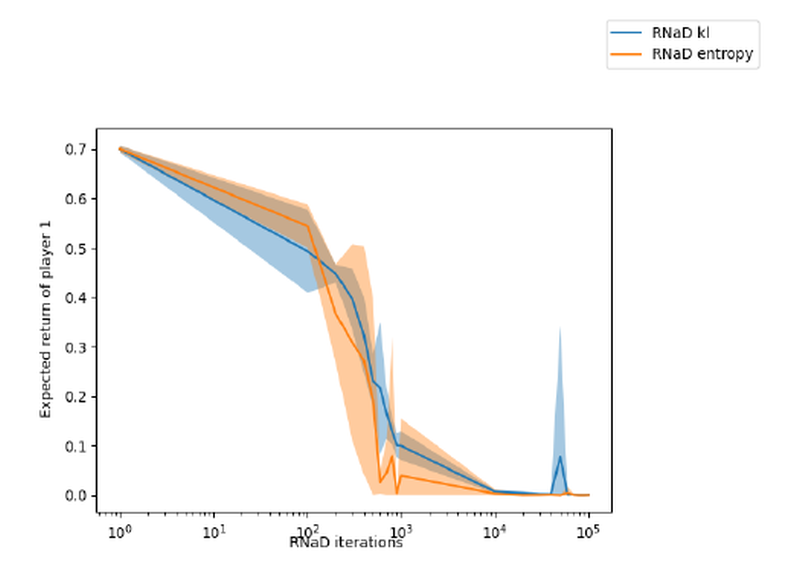

Reward regularization has proven to be a powerful technique in reinforcement learning algorithms for solving imperfect-information games. One algorithm built on this technique is the recently developed Regularized Nash Dynamics (RNaD), which achieved human-expert-level performance in the game Stratego. However, research on this technique has so far focused on two-player zero-sum games, and it remains unknown whether it is also useful in broader classes of games, such as general-sum or multiplayer games. This work therefore aims to devise a more generally applicable extension of these techniques and to adapt the resulting algorithms to this broader class of games. The effectiveness of these modifications is demonstrated in several experiments on pursuit-evasion games.