Example-Based Synthesis of Stylized Facial Animations

ACM Transactions on Graphics (Proceedings of SIGGRAPH 2017) 36(4):155, 2017

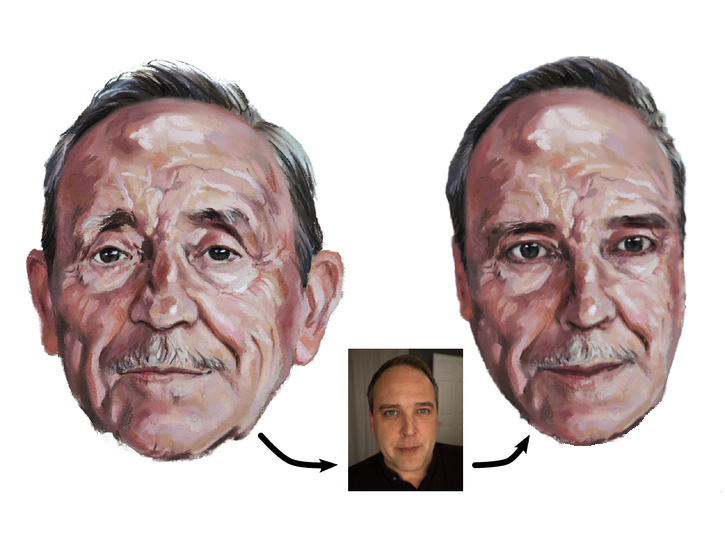

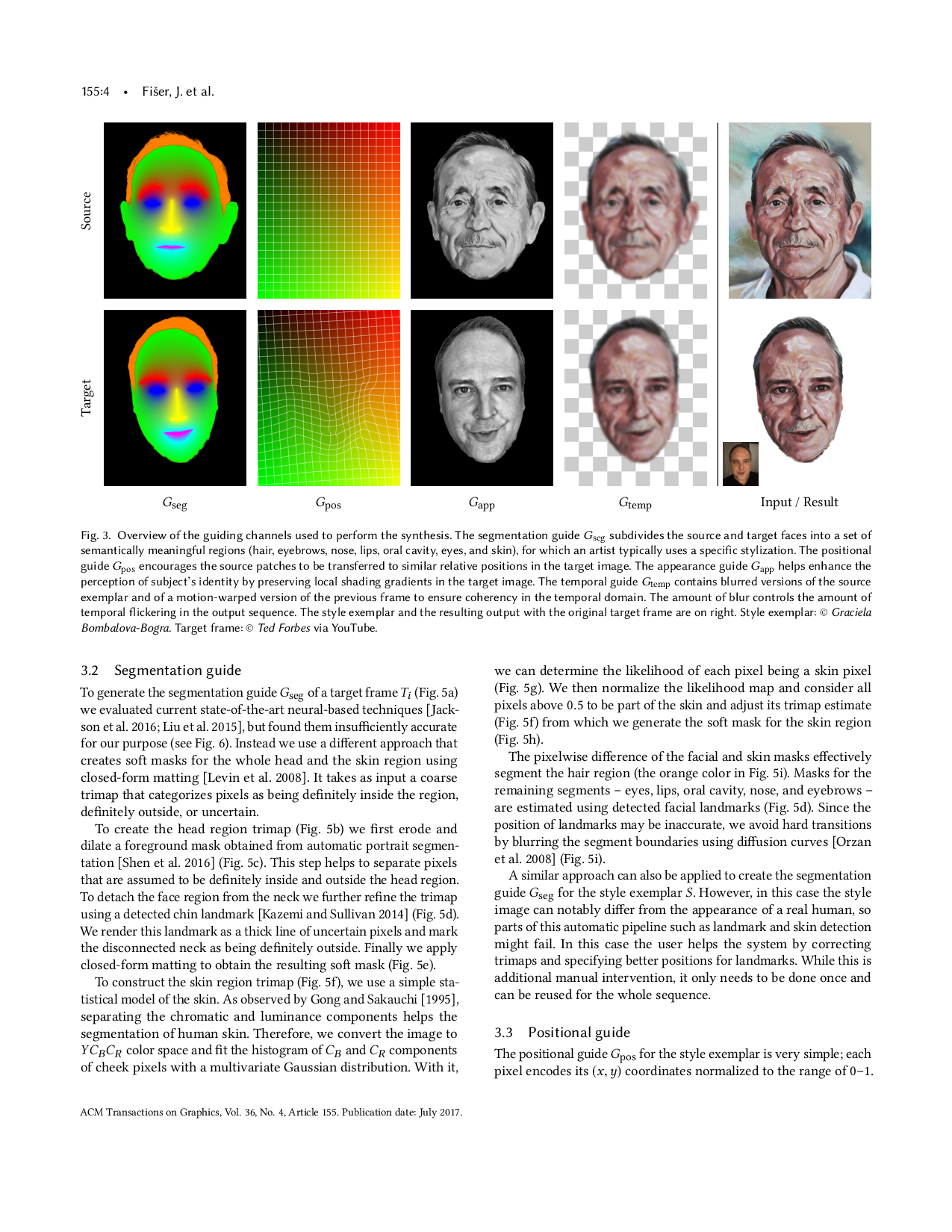

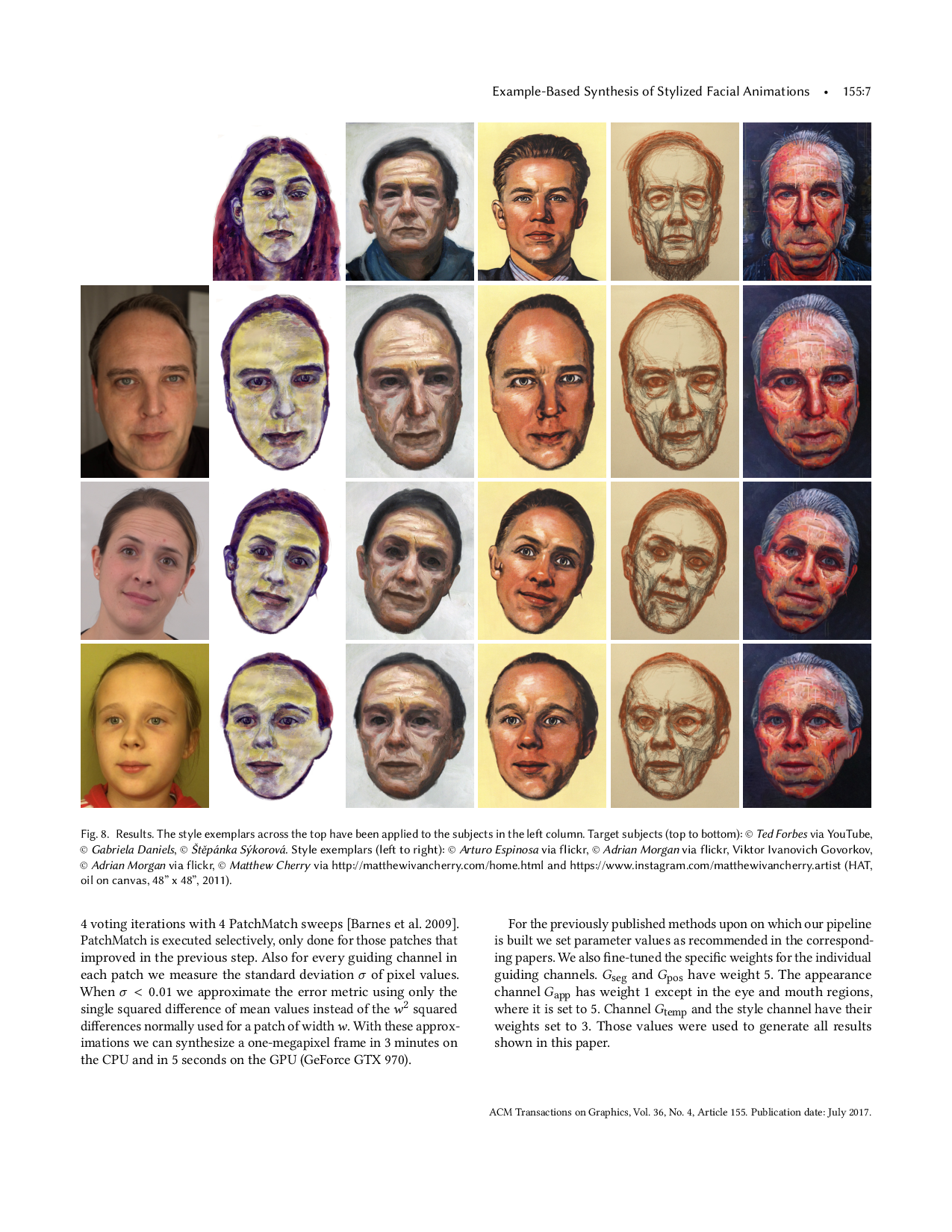

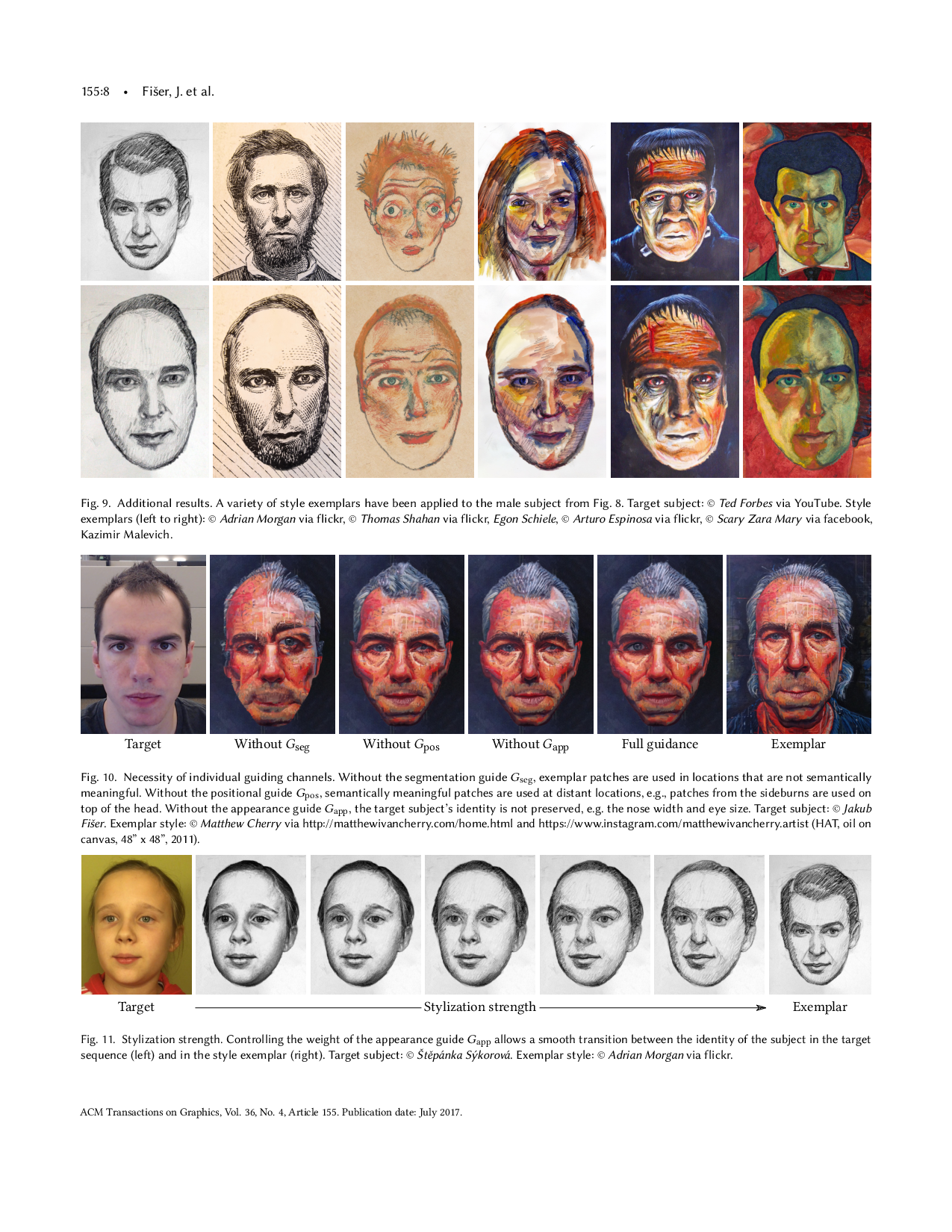

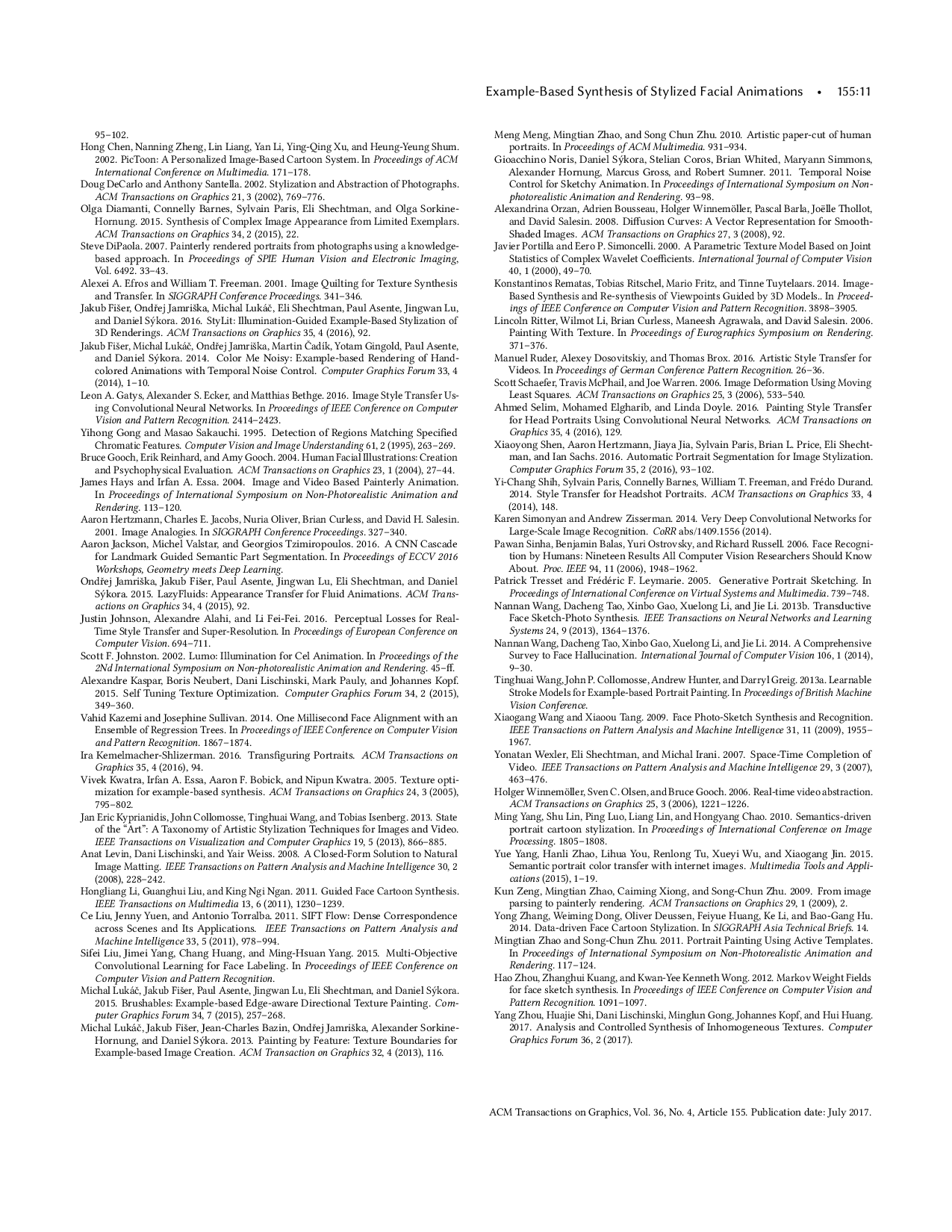

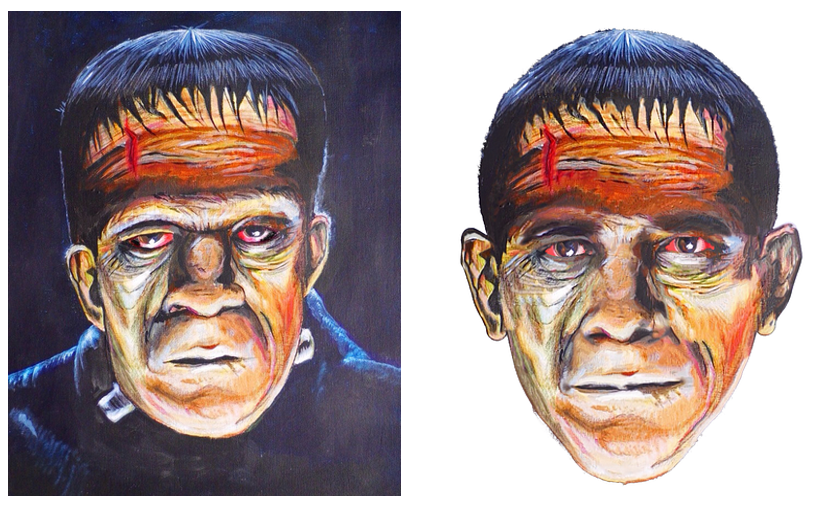

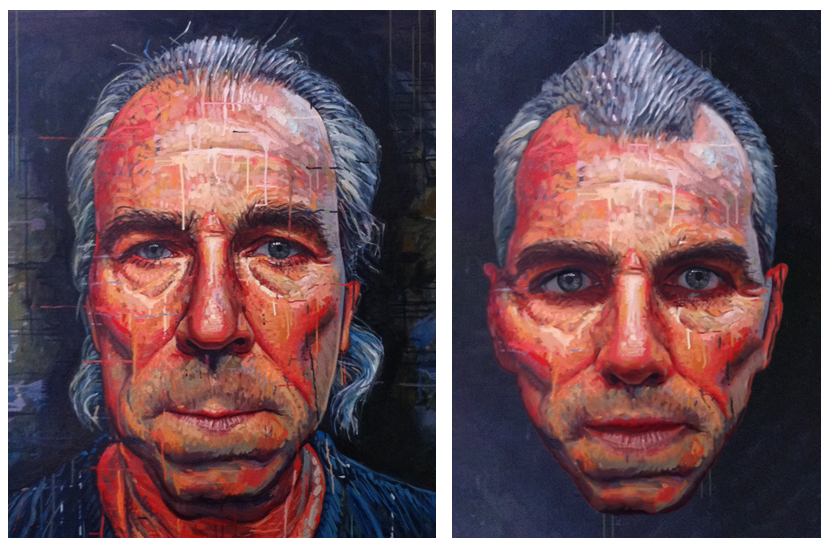

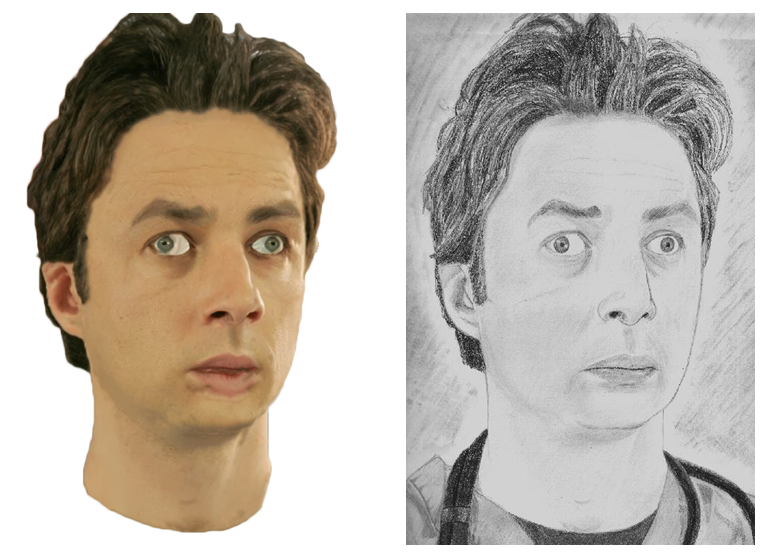

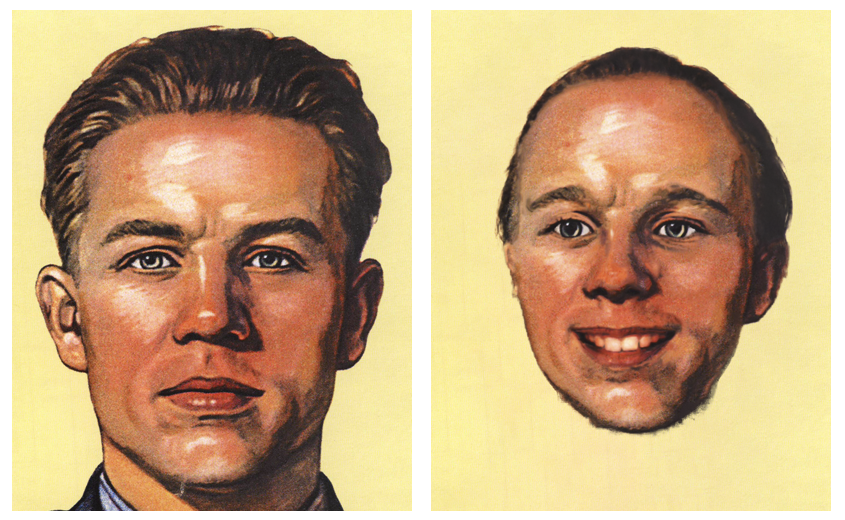

We introduce a novel approach to example-based stylization of portrait videos that preserves both the subject's identity and the visual richness of the input style exemplar. Unlike the current state-of-the-art based on neural style transfer [Selim et al. 2016], our method performs non-parametric texture synthesis that retains more of the local textural details of the artistic exemplar and does not suffer from image warping artifacts caused by aligning the style exemplar with the target face. Our method allows the creation of videos with less than full temporal coherence [Ruder et al. 2016]. By introducing a controllable amount of temporal dynamics, it more closely approximates the appearance of real hand-painted animation in which every frame was created independently. We demonstrate the practical utility of the proposed solution on a variety of style exemplars and target videos.